
Everything posted by allheart55 Cindy E


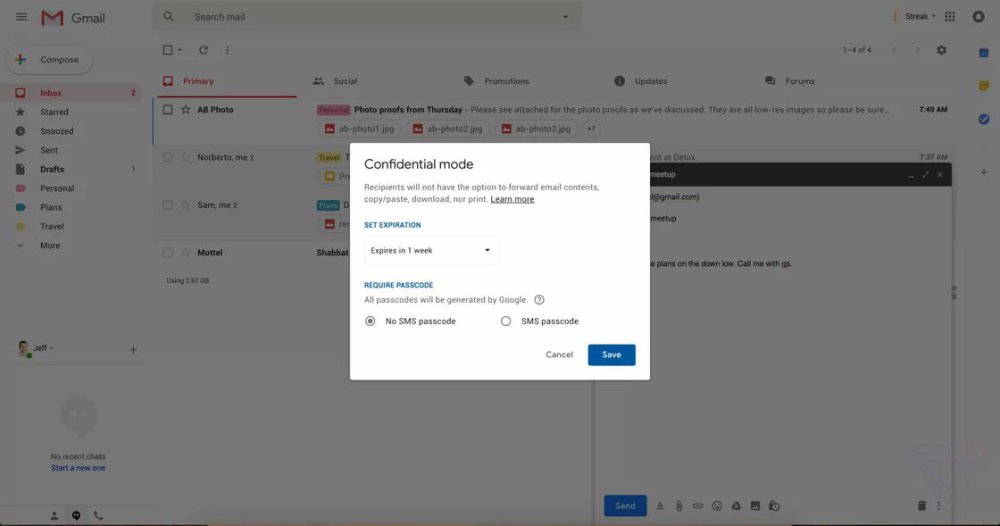

Gmail’s web client is set to receive one of its most substantial overhauls ever in the next few weeks, but Google has yet to announce the update publicly. That hasn’t stopped The Verge from sharing details about the new design all week, including a new report today covering an interesting upcoming feature called “confidential mode.”

In addition to smart replies, a snooze feature and a sidebar for calendar appointments, the new web version of Gmail will include a confidential mode that lets senders stop recipients from forwarding an email, or restrict them from copying, downloading or printing its contents. Google will also let Gmail users require an SMS passcode to open an email and even set an expiration date on the emails they send. Together, these features give Gmail users more control over who sees their emails and how the content of those emails is distributed.

Of course, this won’t stop anyone from copying and pasting the text or snapping a screenshot, but it is an effective way to warn a recipient about the sensitive nature of an email.

Google confirmed the existence of the design overhaul in a statement to The Verge, saying that it is “working on some major updates to Gmail (they’re still in draft phase).” And while the new Gmail won’t be ready for at least another few weeks, it looks like there are plenty of new features to get excited about.

Source: Yahoo


Mark Zuckerberg, CEO of supposed surveillance titan Facebook, has apparently never heard of shadow profiles.

Of all the things learned during Zuckerberg’s questioning by a succession of politicians in Congress this week, for privacy campaigners this was one of the most unexpected. We have Congressman Ben Luján to thank for a discovery that might come to hang around Zuckerberg’s neck as he battles to save his company’s image.

After asking Zuckerberg about the company’s practice of profiling people who had never signed up for the service, Luján said:

So, these are called shadow profiles – is that what they’ve been referred to by some?

Zuckerberg replied:

Congressman, I’m not, I’m not familiar with that.

For anyone unsure of its meaning, shadow profiles are the data Facebook collects on people who don’t have Facebook accounts. Zuckerberg’s ignorance was presumably limited to the term and its usage rather than the concept itself, since Facebook offers non-members the ability to request their personal data.

It seems that all web users are of interest to Facebook, for security and for advertising. During the exchange, Zuckerberg explained that Facebook needs to know when two or more visits come from the same non-user in order to prevent scraping:

…in general we collect data on people who have not signed up for Facebook for security purposes to prevent the kind of scraping you were just referring to … we need to know when someone is repeatedly trying to access our services

A little later he implied that non-users are also subject to data gathering for targeted advertising:

Anyone can turn off and opt out of any data collection for ads, whether they use our services or not

You can opt out of targeted advertising by Facebook and a plethora of other advertisers using the Digital Advertising Alliance’s Consumer Choice Tool, or by blocking tracking cookies with browser plugins.
While not in widespread public use, the term shadow profiles has been circulating in privacy circles for some time. In 2011, an Irish privacy group filed a complaint about shadow profiling: collecting data from non-members, including but not limited to email addresses, names, telephone numbers, addresses and work information. More recently, in the latest instalment of a long-running privacy case, a Belgian court ordered Facebook to stop profiling non-members in the country or face a daily fine.

The problem shadow profiles pose for Zuckerberg is that they blow a hole in some of the arguments he has used to defend the way Facebook collects data on web users, not least that it’s all about security. What about the large number of people who encounter Facebook somewhere and aren’t scraping anything? This includes non-members who encounter it through the ubiquitous ‘like’ button, or by downloading Facebook-owned apps such as WhatsApp or Instagram.

On top of that are technologies such as Facebook Pixel, a web targeting system embedded on lots of third-party sites, which the company has in the past trumpeted as a clever way to serve people (including non-members) targeted ads.

As Luján pointed out, non-members won’t have signed a privacy consent form, nor would they know to delete data they weren’t even aware was being collected.

Ironically, one of the ways the world has learned how Facebook collects and analyses data on non-members was through data breaches such as the one that hit the company in 2013. A journalist at the time summed it up rather well:

You might never join Facebook, but a zombie you – sewn together from scattered bits of your personal data – is still sitting there in sort-of-stasis on its servers waiting to be properly animated if you do sign up for the service.

So, not having a Facebook account is not an effective way to avoid its data harvesting.
Facebook is always watching, analysing and learning, even when it is nowhere to be seen. But is it the only one? With just about every online business model dependent on extensive data gathering and targeted advertising, perhaps Zuckerberg can console himself with the thought that he likely won’t be the last tech executive hauled up and questioned about this topic.

Source: Sophos


After Tuesday’s nearly five-hour grilling in the Senate – more of a light sautéing, really – Facebook CEO Mark Zuckerberg on Wednesday gave Congress another five hours of his life: this time, before the House Energy and Commerce Committee.

Representatives’ questions again hit on Tuesday’s themes: data privacy and the Cambridge Analytica (CA) data-scraping fiasco, election security, Facebook’s role in society, censorship of conservative voices, regulation, Facebook’s impenetrable privacy policy, racial discrimination in housing ads, and what the heck Facebook is. A media company (it pays for content creation)? A financial institution (think about people paying each other through Facebook Messenger)?

Zuck’s take on what Facebook has evolved into:

“I consider Facebook a technology company. The main thing we do is write code. We do pay to help produce content. We also build planes to help connect people, but I don’t consider ourselves to be an aerospace company.”

(Think of Facebook’s flying ISPs.)

When he hears people ask whether Facebook is a media company, the CEO said, what he really hears is whether the company has, or should have, responsibility for published content, be it fake news meant to sway elections, hate speech, or Russian bots doing bot badness.

His answer has evolved. For years, he pushed back against fears about fake news on Facebook: the company just builds the tools and then steps back, he repeatedly said, insisting that the platform doesn’t bear any of a publisher’s responsibilities for verifying information. Zuck still considers Facebook to be primarily a technology company, but over two days of testimony he acknowledged that it has been slow to accept responsibility when people do bad things with its tools.

Overall, the tone of the questioning was a lot tougher than it was in the Senate. Zuckerberg didn’t budge from his script, though. For example, Rep.
Frank Pallone tried to pin Zuck down to a commitment to change all user default settings so as to minimize, “to the greatest extent possible,” the collection and use of data. One-word answer, please: yes or no?

Zuck demurred: “That’s a complex issue,” he said, “and it deserves more than a one-word answer.”

Pallone came back with a zinger about how Facebook has passed the buck on protecting users’ data from being scraped and used to do things like target voters with political ads:

You said yesterday that each of us owns the data we put on Facebook. Every user is in control. But we know the problems with CA. How can Facebook users have control over their data when Facebook itself doesn’t?

OUCH! Yeah, what he said!

Zuck’s I-Am-Teflon response: “We have the ability for people to sign into apps and bring their data with them.” That means you can have, for example, a calendar that shows friends’ birthdays, or a map that shows friends’ addresses. But to do that, an app needs access to your friends’ data as well as your own. Facebook has now limited such app access so that people can only bring in their own data, he said.

OK… but that’s not really an answer.

Of course, during his two days of testimony, Zuckerberg repeatedly explained that users have control over everything they post. There’s that little drop-down, Zuckerberg explained many, many times, that lets you choose who’s going to view your content: the public at large? Just friends? Groups? Just one or two people? It’s up to you!

True, privacy policies are tough to read, and that’s why Facebook tries to put privacy controls right into the stream of things. Like, say, those little drop-down arrows allowing you to choose who sees what… and did he mention those little drop-down arrows that let you choose the audience for a post? Maybe once or twice.

And yes, Zuck said, Facebook is working on making it easier for users to get to privacy settings.
For example, after CA blew up last month, Facebook pledged to reach into the 20 or so dusty corners where it has tucked away privacy and security settings and pull them into a centralized spot where users can more easily find and edit whatever data it holds on them.

Assorted other bees in the House’s bonnet included:

Diamond and Silk. Diamond and Silk. Diamond and Silk.

What in the world is this “Diamond and Silk” that conservative lawmakers repeatedly asked about during the two days of Zuck’s testimony? For those of us who aren’t familiar, it’s not a luxury brand: they’re two pro-Trump vloggers, Lynnette “Diamond” Hardaway and Rochelle “Silk” Richardson, who have claimed that Facebook censored them, deeming their content “unsafe.”

On Wednesday, Rep. Joe Barton started his questioning by reading a request he got from a constituent: “Please ask Mr. Zuckerberg, why is Facebook censoring conservative voices?” He said he’d received “dozens” of similar queries through Facebook.

Zuckerberg: “Congressman, in that specific case, our team made an enforcement error and we have already gotten in touch with them to reverse it.”

Sen. Ted Cruz also brought up the vloggers during a heated exchange with Zuckerberg on Tuesday, citing them as an example of what he called Facebook’s “pattern of bias and political censorship.” Diamond and Silk were also brought up by Rep. Fred Upton and Rep. Marsha Blackburn, the latter of whom also asked whether Facebook does “subjectively manipulate algorithms to prioritize or censor speech.” When Zuck began his response by referencing hate speech and terrorist material, “the types of content we all agree we don’t want on the service,” which are automatically identified and banned from the platform, Blackburn angrily interrupted him:

Let me tell you something right now. Diamond and Silk is not terrorism!

What’s the difference between Facebook and J. Edgar Hoover?
Over the course of two days, both chambers of Congress wondered whether Facebook is a surveillance outfit. Does it listen to our conversations? One seed of that worry was planted when many users, post-CA, requested their data archives from Facebook, only to find that the platform logs calls and texts… with permission, as Facebook stressed, a point that apparently went in many ears and right back out.

Rep. Bobby Rush asked Zuckerberg what the difference is between Facebook and a 1960s government counterintelligence program in which the FBI and local police tracked and shared information about civil rights activists, including their religious and political ideology. Rush himself was a “personal victim” of the program.

“Your organization is similar,” Rush said. “You’re truncating basic rights … including the right to privacy. What’s the difference between Facebook’s methodology and the methodology of American political pariah J. Edgar Hoover?”

Zuck said the difference between surveillance and what Facebook does is that on Facebook, you have control over your information. “You put it there. You can take it down anytime. I know of no surveillance organization that gives people that option.”

Mark, you’ve had a tough few days. I hate to make your week even more arduous. But puh-LEEEZ. Come on. Everybody knows that Facebook tracks us across the web, even when we leave the platform. It’s been doing it for years.

One tool among many for doing so is Facebook Pixel, a tiny, transparent image file the size of just one of the millions of pixels on a typical computer screen. No user would ever notice the microscopic snippet, but the requests web pages send to fetch it are packed with information.

No, we don’t have that much control over our information. But Mark Zuckerberg has demonstrated masterful control when it comes to staying on message.

Source: Sophos


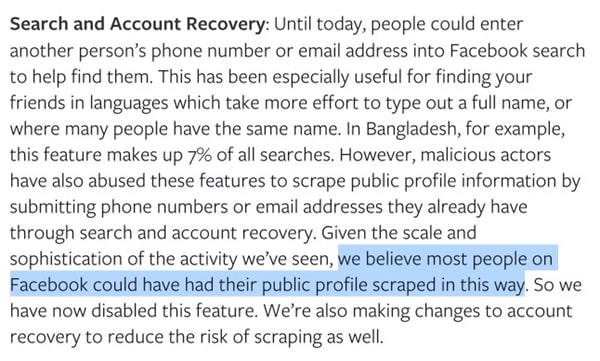

Facebook ignored a widely known privacy flaw for years, allowing scammers, spammers, and other malicious parties to scoop up virtually all users’ names and profile details.

As I explained way back in 2012, when I was writing for the Sophos Naked Security blog, simply entering someone’s phone number or email address into Facebook’s search box would perform a reverse look-up and tell you who it belonged to, along with any information they shared publicly on their Facebook profile.

Facebook had set the default for “Who can look you up using the email address or phone number you provided?” to “Everybody”. That, of course, was the weakest possible privacy: no privacy at all. Facebook knew that most people would never bother to change the setting, and at the same time pressured users to enter a phone number when creating an account or during verification.

Three years passed, and a software developer wrote just a few lines of code that automatically cycled through every possible mobile number in the UK, United States, and Canada, scooping up users’ names, photos, and other data.

That kind of information could be pretty useful for a scammer. For instance, they could phone you pretending to be your mobile phone company, and refer to you by name to appear more convincing.

Facebook didn’t stop the developer’s code from accessing hundreds of millions of its users’ profiles. What it did do was tell him that it didn’t consider it an issue.

Another three years have passed, and Facebook finds itself in hot water after the Cambridge Analytica debacle. With its share price slammed by allegations that its business model does not take users’ privacy seriously, Facebook published a blog post this week detailing some of the changes it is making.

Finally, Facebook is acknowledging that offering a reverse look-up based on phone numbers and email addresses is disastrous, and says it is disabling the feature.
But more than that, it is admitting that “most people on Facebook could have had their public profile scraped in this way.” Anyone who didn’t change their privacy settings after adding their phone number should assume that their information has been harvested.

Facebook chief Mark Zuckerberg acknowledged the scale of the problem in a Q&A with journalists:

“I certainly think that it is reasonable to expect that if you had that setting turned on, that at some point during the last several years, someone has probably accessed your public information in this way.”

How long is it going to take before people wake up to what’s going on here? Facebook’s business model is no secret, and it is fundamentally incompatible with a growing number of people’s desire for online privacy. Even when told about serious problems, Facebook ignored them.

Source: Graham Cluley


Yikes! In prepared remarks for a rare joint hearing of the Senate Judiciary and Commerce Committees on Tuesday and Wednesday, Facebook CEO Mark Zuckerberg said that malefactors have used reverse lookup “to link people’s public Facebook information to a phone number”!

Quelle surprise. According to Zuckerberg’s prepared remarks, Facebook only discovered the incidents a few weeks ago and immediately shut down the phone number/email lookup feature that let it happen:

When we found out about the abuse, we shut this feature down.

And thus, to borrow the Daily Beast’s phrasing, Zuckerberg gaslighted Congress before the hearings even started.

On Tuesday, though, senators were ready to grill the Congressional-hearing newcomer about that “Well, shucks, we just found out” bit. Sen. Dianne Feinstein was the first to jump in, pointing out that Facebook learned about Cambridge Analytica’s (CA’s) misuse of data in 2015 but didn’t take significant steps to address it until the past few weeks.

Zuckerberg’s response, reiterated many times during five hours of testimony: We goofed. CA told us it deleted the data. We believed them. We shouldn’t have. It won’t happen again.

Sen. Chuck Grassley asked the CEO whether Facebook has ever conducted audits to ensure deletion of inappropriately transferred data (it seemed to have an audit allergy, at least during whistleblower Sandy Parakilas’s tenure), and if so, how many times? My people will get back to you on that, Zuckerberg said… Many times, to many questions.

But with regard to app developers’ handling of user data, Facebook will do better, he promised: it will take a more proactive approach to vetting how app developers handle user data, will do spot checks, and will boost the number of audits.

The email/phone lookup feature wasn’t how data-analytics firm CA – one of the multiple rocket thrusters that pushed Zuckerberg into getting this call from Congress – got hold of users’ data, though. CA’s data came from a personality-quiz app built by researcher Aleksandr Kogan, which harvested profile information from the people who installed it and from their Facebook friends.
In CA’s case, we’re talking about the profile information of up to 87 million users who were subjected to data harvesting without their permission. (The “most people on Facebook” figure that Facebook has given refers to the separate reverse-lookup scraping.)

In response to the CA (and Russia, and bot, and fake news) outrage, Tuesday’s testimony was the same litany of apologies and pledges to do better that Facebook has been singing since its founding 14 years ago. That’s 14 years of moving fast and breaking things, including any notion that it might choose to protect users from its customers. Wired has called it the “14-year apology tour.” The more things change, the more they stay the same.

Wired:

In 2003, one year before Facebook was founded, a website called Facemash began nonconsensually scraping pictures of students at Harvard from the school’s intranet and asking users to rate their hotness. Obviously, it caused an outcry. The website’s developer quickly proffered an apology. ‘I hope you understand, this is not how I meant for things to go, and I apologize for any harm done as a result of my neglect to consider how quickly the site would spread and its consequences thereafter,’ wrote a young Mark Zuckerberg. ‘I definitely see how my intentions could be seen in the wrong light.’

Tuesday’s testimony was more of the same: on CA and other Facebook app developers’ use and abuse of Facebook users’ data, on how Facebook could possibly have been unaware of what Russian actors were up to when using the platform to tinker with the 2016 US presidential election, and on Russian bots spreading discord and fake news.

It wasn’t so much a grilling. It was more of a golden toasting. Much of this had to do with the fact that some senators proved themselves to be fairly clueless about the intricacies of technology and online business models. An example: Sen. Bill Nelson rambled on for a bit about posting about dark chocolate and suddenly having ads for dark chocolate pop up on Facebook.
Could it be that Facebook might, as COO Sheryl Sandberg suggested on the Today show, charge people to not see ads about dark chocolate?! The idea of being charged for Facebook’s “free” services must really have resonated. An exchange between Sen. Orrin Hatch and Zuckerberg:

Hatch: ‘How do you sustain a business model in which users don’t pay for your service?’

Zuckerberg: ‘Senator, we run ads.’

Not all senators proved out of their depth. As CNN notes, Sen. Lindsey Graham was “smart and informed.” The same goes for Sen. Brian Schatz, who pinned Zuckerberg down on what it means when Facebook claims that every user “owns” his or her own information. Sen. Chris Coons highlighted the problems inherent in Facebook’s ad targeting: what if a diet pill manufacturer were able to target teenagers struggling with bulimia or anorexia?

But Zuckerberg stuck to a strict script. He likely made his coaches proud. He had, in fact, been coached like a politician getting ready for a televised debate: according to the New York Times, Zuck had been undergoing “a crash course in humility and charm,” including mock interrogations from his staff and outside consultants.

More takeaways from Tuesday’s testimony:

Facebook is open to the “right” regulation. Sen. Maggie Hassan: Will you commit to working with Congress to develop ways of protecting constituents, even if it means laws that adjust your business model? Zuck: Yes. Our position is not that regulation is wrong. [Facebook just wants to make sure it’s the “right” regulation.]

Cambridge University researcher Aleksandr Kogan shared user data with other firms besides CA. Sen. Tammy Baldwin asked whether Kogan sold the data to anyone besides Cambridge Analytica. Zuck: Yes, he did. He mentioned Eunoia as one of the companies but said there may be others.
Not banning CA in 2015 was “a mistake.” Zuck corrected an earlier statement: CA was, in fact, an advertiser in 2015, so Facebook could have banned the firm when it first learned of its data scraping. Zuck said not doing so was a “mistake”.

Where does Facebook go from here? As New Yorker writer Anna Wiener noted in a roundtable discussion, it’s in a bind:

To ‘fix’ Facebook would require a decision on Facebook’s part about whom the company serves. It’s now in the unenviable (if totally self-inflicted) position of protecting its users from its customers.

Well, we may not know how Facebook is going to figure that one out, but we know where it’s going today: back to Congress for more of the same.

Source: Sophos


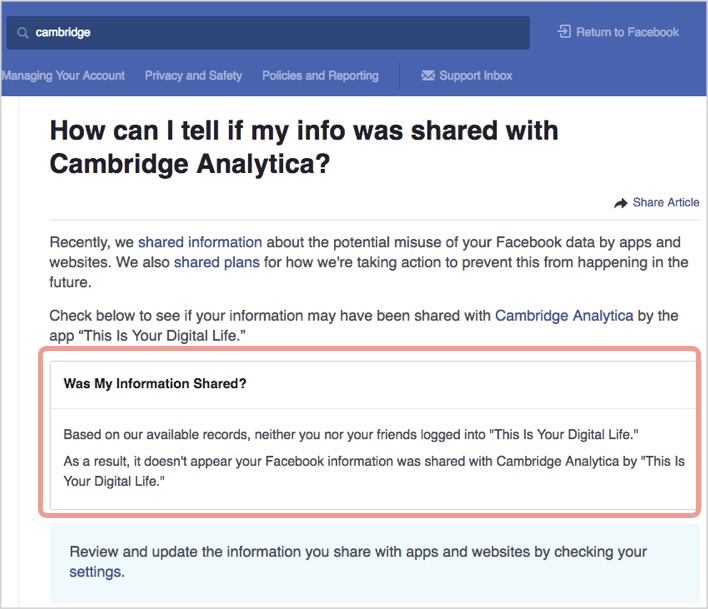

We’re sure you’ve heard of Cambridge Analytica (CA), the controversial company that harvested data from Facebook and then used it in ways that you almost certainly wouldn’t have wanted.

About a month ago, we reported how a CA whistleblower named Christopher Wylie claimed that the company had allegedly:

…exploited Facebook to harvest millions of people’s profiles. And built models to exploit what we knew about them and target their inner demons. That was the basis the entire company was built on.

Were you affected?

The thing is that CA didn’t crack passwords, break into accounts, rely on zillions of fake profiles, exploit programming vulnerabilities, or do anything that was technically out of order.

Instead, CA persuaded enough people to trust and approve its Facebook app, called “This is Your Digital Life”, that it was able to access, accumulate and allegedly abuse personal data from millions of users. That’s because the app grabbed permission to access data not only about you, but about your Facebook friends.

In other words, if one of your friends installed the app, then they might have shared with CA various information that you’d shared with them.

But how can you find out which of your friends (some of whom may be ex-friends by now) installed the app, and be sure that they remember correctly whether they used it or not? Facebook has now come up with a way, given that it has logs that show who used the app, and who was friends with them.

We used this link: How can I tell if my information was shared with Cambridge Analytica? (Facebook Help Center)

After we’d logged into Facebook, we got the result we hoped for: Phew – we’re OK.

Unfortunately, you might not be, but if you don’t yet know, it’s worth finding out, even if only to help you decide how to approach social networks, friending and sharing from now on.

What to do?
If some of your personal data has fallen into Cambridge Analytica’s hands, there’s not much you can do about that now – the horse has already bolted. But it’s still worth locking the stable door, to tighten things up for next time.

As Facebook recommends, review and update the information you share with apps and websites via the Facebook settings page.

Also, consider how much personal data you want to share with your Facebook friends, and how many friends you want to share it with.

Remember: if in doubt, don’t give it out.

Source: Sophos


I wish all forum software had this feature

allheart55 Cindy E replied to plodr's topic in Tech Help and Discussions

I loved it when Bob gave us this add-on. It's a great feature.


Revelations that data belonging to as many as 87 million Facebook Inc. users and their friends may have been misused have become a game changer in the world of data protection, as regulators look to raise awareness about how to protect information.

Elizabeth Denham, the U.K. privacy regulator leading the European investigations into how user data ended up in the hands of consulting firm Cambridge Analytica, will say in a speech Monday that the technology industry and regulators must improve the public’s trust and confidence in how their private information is handled.

“The dramatic revelations of the last few weeks can be seen as a game changer in data protection,” Denham, the U.K. Information Commissioner, will say at her agency’s annual conference for data-protection practitioners. “Suddenly, everyone is paying attention.”

Denham’s office is combing through evidence it gathered at the offices of Cambridge Analytica during searches last month, following reports that the political consulting firm had obtained swathes of data from a researcher who transferred the data without Facebook’s permission. Denham has said that Facebook has been cooperating with her probe, though it’s too soon to say whether the social network’s planned changes will be enough.

Her office, the Information Commissioner’s Office (ICO), has been reviewing the use of data analytics for political purposes since May 2017 and is now investigating 30 organizations, including Facebook, Denham said earlier this month.

“Our ongoing investigation into the use of personal data analytics for political purposes by campaigns, parties, social media companies and others will be measured, thorough and independent,” she will say in her prepared remarks.
“Only when we reach our conclusions based on the evidence will we decide if enforcement action is warranted.”

The remarks come ahead of a meeting of the EU’s 28 data watchdogs in Brussels to discuss the issue, and a call between Facebook Chief Operating Officer Sheryl Sandberg and the bloc’s Justice Commissioner Vera Jourova.

Denham’s speech also comes ahead of a public awareness campaign, called Your Data Matters, which seeks to restore people’s trust in how data is treated.

“The proper use of personal data can achieve remarkable things,” said Denham. “Now, more than ever, the role of data protection practitioner is not just as a guardian of privacy but as an ambassador for the appropriate use of personal data in line with the law.”

Source: IT Pro Today


Who are these yo-yos who share fake news on social media? None of your friends, right? Your friends are too smart to fall for cockamamie clickbait, and they’re diligent enough to check a source before they share, right?

Well, get ready to have the curtain drawn back. These yo-yos may be us. Or, at least, they may turn out to be our friends and/or relatives.

In its ongoing fight against fakery, Facebook has started putting some context around the sources of news stories. That covers all of them: the sources with good reputations, the junk factories, and the bot armies that churn out junk and make money from it.

On Wednesday, Facebook announced that it’s adding features to the context it started putting around News Feed publishers and articles last year.

You might recall that in March 2017, Facebook started slapping “disputed” flags on news that its panel of fact-checkers deemed fishy. You might also recall that the flags just made things worse: they did nothing to stop the spread of fake news, instead causing traffic to some disputed stories to skyrocket as a backlash against what some groups saw as an attempt to bury “the truth”.

In Facebook’s new spin on “putting context” around news and its sources, it’s not relying on fact-checkers. Rather, it’s leaving it up to readers to decide for themselves what to read, what to trust and what to share. When it mothballed the “disputed” flags, Facebook noted that fact-checkers can be sparse in some countries.

So this time around, Facebook said, the context will include the publisher’s Wikipedia entry, related articles on the same topic, information about how many times the article has been shared on Facebook, where it’s been shared, and an option to follow the publisher’s page. If a publisher doesn’t have a Wikipedia entry, Facebook will indicate that the information is unavailable, “which can also be helpful context,” it said.

Facebook is rolling out the feature to all users in the US.
If the feature has been turned on for you, you’ll see a little “i” next to the title of a news story.

Once you click on that “i”, you’ll get a popup that shows the Wikipedia entry for the publisher (if available), other articles from the publisher, an option to follow the publisher, a map of where in the world the story has been shared, and, at the bottom, a list of which of your friends have shared it, along with the total number of shares.

Facebook says it’s also starting a test to see whether providing information about an article’s author will help people evaluate the credibility of the article. It says that people in this test will be able to tap an author’s name in Instant Articles to see additional information, including a description from the author’s Wikipedia entry, a button to follow their Page or Profile, and other recent articles they’ve published.

The company says that the author information will only display if the publisher has implemented author tags on its website to associate the author’s Page or Profile with the article byline, and the author’s association with the publisher has been validated. The test will start in the US.

Source: Sophos


Intel has revealed that it will not issue Spectre patches for a number of older Intel processor families, potentially leaving many customers vulnerable to the exploit. Intel claims the affected processors are mostly implemented as closed systems, so they aren’t at risk from Spectre, and that their age means they have limited commercial availability.

The processors Intel won’t be patching include lines from 2007 (Penryn, Yorkfield, and Wolfdale), along with Bloomfield (2008), Clarksfield (2009), Jasper Forest (2010) and the Intel Atom SoFIA processors from 2015.

According to Tom’s Hardware, Intel’s decision not to patch these products could stem from the relative difficulty of patching Spectre on older systems.

“After a comprehensive investigation of the microarchitectures and microcode capabilities for these products, Intel has determined to not release microcode updates for these products,” Intel said. “Based on customer inputs, most of these products are implemented as ‘closed systems’ and therefore are expected to have a lower likelihood of exposure to these vulnerabilities.”

Because of the nature of the Spectre exploit, fixes for it have to reach users as an operating system or BIOS update, and if Microsoft and motherboard OEMs aren’t going to distribute them, developing the microcode isn’t much of a priority.

“However, the real reason Intel gave up on patching these systems seems to be that neither motherboard makers nor Microsoft may be willing to update systems sold a decade ago,” Tom’s Hardware reports.

It sounds bad, but as Intel pointed out, these are all relatively old processors (with the exception of the Intel Atom SoFIA, which came out in 2015), and it’s unlikely they’re used in any high-security environments.
The Spectre exploit is a serious security vulnerability to be sure, but as some commentators have pointed out in recent months, it’s not the kind of exploit the average user needs to worry about. “We’ve now completed release of microcode updates for Intel microprocessor products launched in the last 9+ years that required protection against the side-channel vulnerabilities discovered by Google Project Zero,” said an Intel spokesperson. “However, as indicated in our latest microcode revision guidance, we will not be providing updated microcode for a select number of older platforms for several reasons, including limited ecosystem support and customer feedback.” If you have an old Penryn processor toiling away in an office PC somewhere, you’re probably more at risk for a malware infection arising from a bad download than you are susceptible to something as technically sophisticated as the Spectre or Meltdown vulnerabilities. Source: Yahoo

-

Newsflash! Hold onto your hoodies! Mozilla, the makers of Firefox, is less than impressed with Facebook. Last week, Mozilla announced that it was suspending all of its Facebook advertising, citing concerns that the social network’s current default privacy settings are not protecting users well enough. And now Mozilla has announced a new Firefox add-on, called Facebook Container, that “isolates your Facebook identity from the rest of your web activity.” Here’s what Facebook Container does in Mozilla’s own words: Facebook Container works by isolating your Facebook identity into a separate container that makes it harder for Facebook to track your visits to other websites with third-party cookies. Installing this extension deletes your Facebook cookies and logs you out of Facebook. The next time you navigate to Facebook it will load in a new blue colored browser tab (the “Container”). You can log in and use Facebook normally when in the Facebook Container. If you click on a non-Facebook link or navigate to a non-Facebook website in the URL bar, these pages will load outside of the container. In addition, Facebook Container can block the Facebook social widgets and buttons that are distributed liberally across the internet, embedded on countless websites, that can silently track your surfing. In simple language, you can continue to use Facebook normally. And Facebook can continue to display advertising to you. But with Facebook Container installed, the social network will find it much more difficult to track any of your activity outside of Facebook. Which means fewer of those creepy targeted messages.
You can read more details of how the Facebook Container browser extension works on the Firefox blog, but there’s at least one other important point to underline: Running Facebook Container doesn’t protect you from any of the data abuses detailed in the recent Cambridge Analytica debacle, which saw the profile data of some 50 million Facebook users fall into unauthorised hands. Equally, running Facebook Container is no replacement for the various other layers of protection you may wish to have in place when surfing online, such as a VPN, ad-blocking software, anti-virus, and Tor. But bravo to Mozilla. They know many Facebook users are concerned about better protecting their privacy and dislike some of the ways that the social network operates, but aren’t yet ready to make a clean break. For them, Facebook Container is a neat addition. The best solution of all, of course, is simply to quit Facebook permanently. Source: Graham Cluley

-

California burger chain In-N-Out Burger is not amused by YouTube prankster Cody Roeder, whose antics have included pretending to be the company’s CEO and telling a customer that their meal was “contaminated” and “garbage.” Roeder films pranks for his YouTube channel, Troll Munchies. You can see his prior pranks on that channel – the picking up girls/embarrassing Mom prank, “hilarious fart vape pen” and the like – but the In-N-Out videos posted two weeks ago have since been made private, according to the BBC. That’s likely because it’s gotten Roeder in a bit of a legal pickle. In-N-Out last week sought a restraining order against the prankster and his film crew. It also filed a lawsuit that claims that Roeder’s two recent pranks caused “significant and irreparable” harm to the chain. The suit seeks damages of more than $25,000. CBS Los Angeles, which featured some footage taken of Roeder’s pranks in its own news coverage, says that early last month, Roeder put on a dark suit, walked into an In-N-Out in Van Nuys, and claimed to be their CEO. “Hey, I’m your new CEO,” he said. “Just doing a little surprise visit.” Some of the employees believed him, but the manager wasn’t convinced. She asked him to step away from behind the counter, as Roeder told employees he wanted a cheeseburger and fries for a taste test. He left after employees called police. Roeder wasn’t done with the prank, though. The next day, he went to a Burbank In-N-Out, again claimed to be the new CEO, and this time demanded to talk to the manager about “contamination” of the food, saying: All of this is unsanitary, most of this is dog meat. Sir, sir, I hate to say this… but your food is contaminated. This is just, it’s garbage. He then told a customer that he needed to take his food. Then, he dropped the customer’s burger on the floor, said “It’s garbage,” and stepped on it. Employees again told him to leave. 
In-N-Out said in a statement that it won’t put up with people using the chain’s restaurants, employees and customers in their lust for social media fame: These visitors have unfortunately used deceit, fraud, and trespass to their own advantage, and in each instance, they have attempted to humiliate, offend or otherwise make our customers or associates uncomfortable. We believe that we must act now and we will continue to take action in the future to protect our customers and associates from these disruptions. This isn’t the first time that self-styled pranksters have gotten into serious trouble. In 2016, four members of the YouTube channel TrollStation – known then as the septic tank of prankster sites – were jailed for staging and filming fake robberies and kidnappings. Their aggressive and/or violent public antics have included trolls enacting brawls and smashing each other in the head with bottles made out of sugar. Last year, a couple in the US reportedly lost custody of two of their five children, whom they had filmed while screaming profanities at them, breaking their toys as a “prank” and blaming them for things they didn’t do. Some of the videos, posted to their DaddyOFive YouTube channel, showed the kids crying and being pushed, punched and slapped. In February, Australian YouTube prankster Luke Erwin was fined $1,200 for jumping off a 15-meter-high Brisbane bridge in the viral “silly salmon” stunt. US YouTube prankster Pedro Ruiz III was killed last year by his girlfriend, the mother of his children, after insisting that she shoot a .50 caliber bullet through an encyclopedia he was holding in front of his chest. She’s now serving a 180-day jail term. Can YouTube stop this madness? YouTube is already making moves to regulate controversial videos. Last month, for one thing, the site revealed that it’s planning to slap excerpts from Wikipedia and other websites onto pages containing videos about hoaxes and conspiracy theories. But prank videos?
They’re click-gold, and that’s apparently helping to keep them from being regulated. As Amelia Tait has described it for New Statesman, and as the YouTube channel Nerd City has, the enormously popular and insanely dangerous pranking culture shrugs off critics with catchphrases such as “it was a social experiment,” “block the haters,” and “It’s just a prank, bro.” Both the quest for fame and the profit to be made off ads on these prankster videos are causing an arms race to the bottom as shock-jocks try ever harder, more dangerous, more violent and more illegal stunts. These aren’t just pranks. These are videos in which people get hurt, or worse. Children get punched, tampons get coated in hot pepper, and their makers use the hashtag “funny”. In-N-Out Burger, we’re with you. We aren’t laughing either. Source: Sophos

-